Summary

- Gemini and Apple Intelligence are attempts to address the interest in generative AI.

- Apple Intelligence centers on on-device AI for image generation and summaries.

- Gemini is more flexible and more powerful for working with text and other files.

AI is everywhere, but if you set aside the average app’s addition of a chatbot or some kind of summary tool, the most important AI features you encounter are the ones already built into the phones, tablets, laptops, and services you use every day.

If that gadget is a modern Android device, or if you rely on the most popular Google productivity apps, it’ll be Gemini. If it’s an Apple device running an A17 Pro or later, or an M1 or later, it’s Apple Intelligence. As AI experiences, Gemini and Apple Intelligence have some pretty big differences, but they share enough goals in what they want to enable customers to do that they can be compared. Only one AI can come out on top, however, so here’s how Gemini and Apple Intelligence compare.

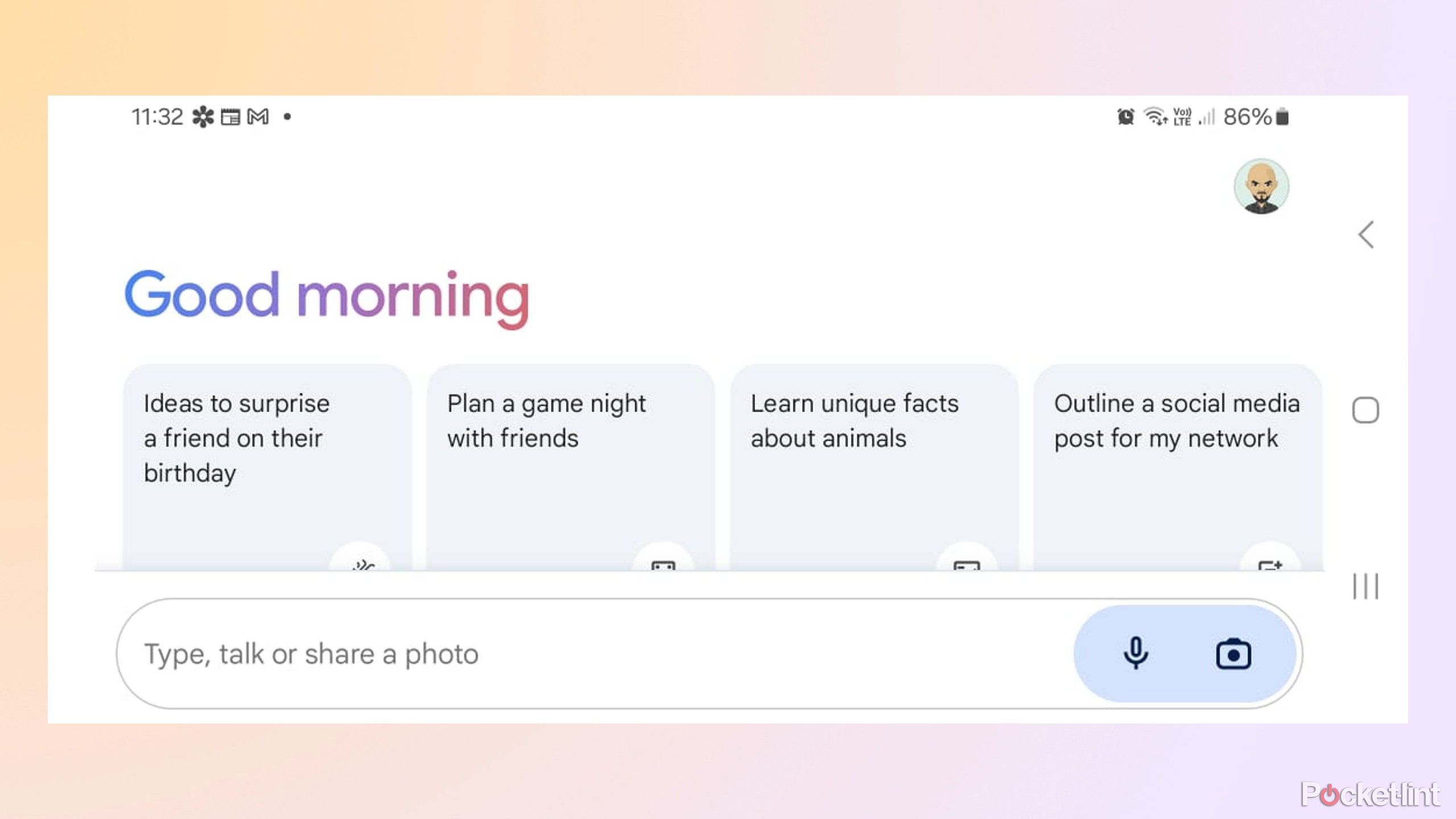

Gemini and Apple Intelligence answer the growing interest in generative AI

Gemini is another name for Google’s generative AI experiences

Gemini debuted to the public as an experimental Google chatbot called Bard in March 2023. At the time of its release, Bard was seen as a quick bit of catch-up to not seem completely behind OpenAI, which had released ChatGPT, its own multipurpose chatbot, near the end of 2022. ChatGPT exploded in popularity in 2023, and Bard was Google’s answer to the natural language conversations and general knowledge ChatGPT was able to spit out.

Bard was developed over time and gained new skills, primarily as extensions that let it interface with Google’s services, but it was ultimately replaced by Gemini in February 2024. Gemini was a much more cohesive vision of Google’s AI and even more functional when it comes to multimodal inputs that go beyond text, like audio files, images, or videos.

Apple Intelligence is a combination of different AI tools

Like Google, Apple has been integrating machine learning across its hardware and software for years, most visibly for the average user in its camera app. The company was first rumored to be working on its own generative AI model called “Ajax” in 2023, the first hint that Apple felt like it needed to have something to say on AI. Apple Intelligence was ultimately announced in June 2024 as more of a suite of different products and an approach to AI, rather than a single chatbot-centered experience.

Apple Intelligence covers everything from Private Cloud Compute, Apple’s approach to processing AI requests in the cloud securely, to something like an improved Siri that can understand the context of your screen and take actions in apps on your behalf. Gemini and Apple Intelligence are different products, but there are areas where they interact with the average person in similar ways.

Answering general questions

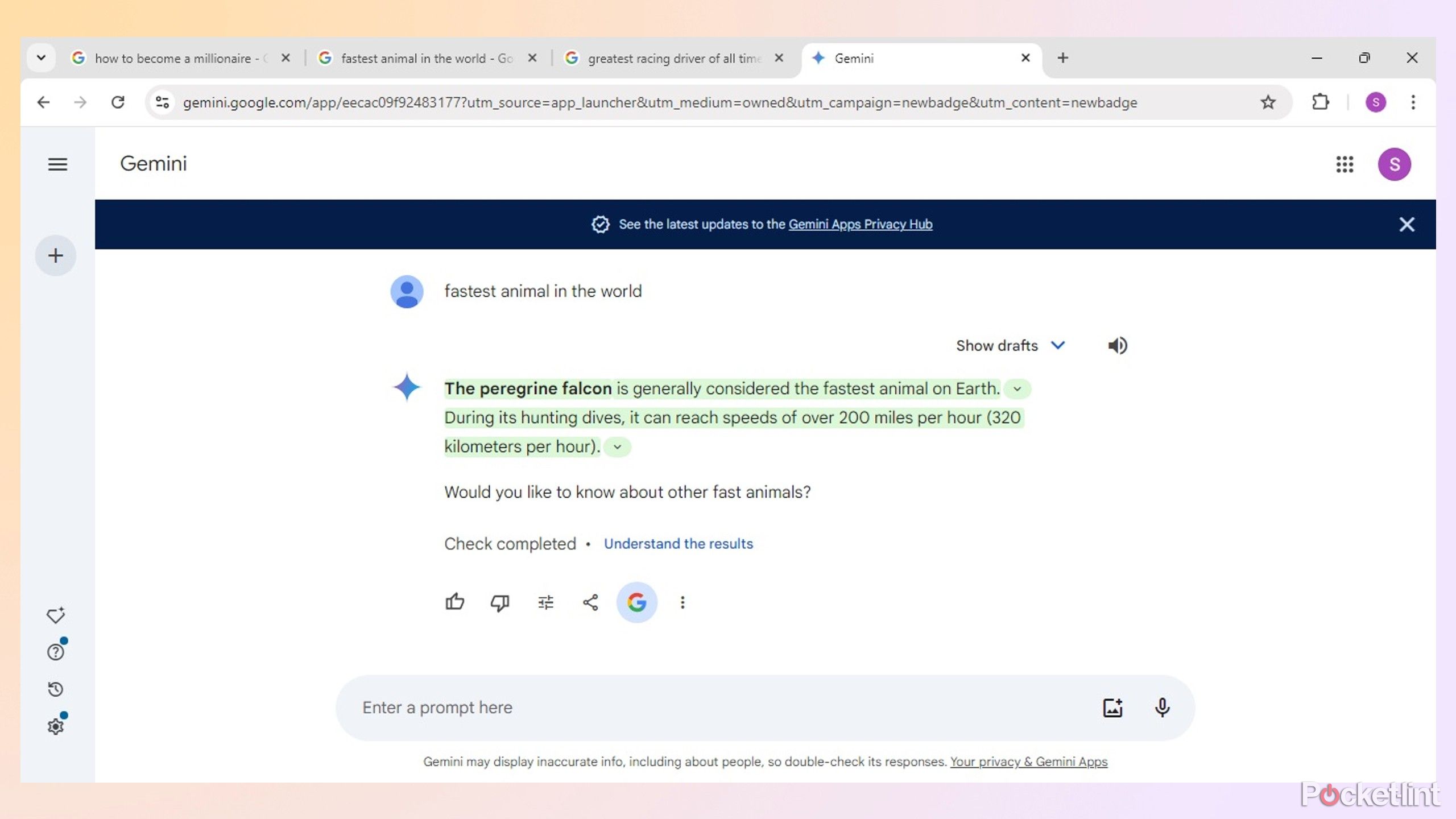

Gemini has an answer for everything, even if it’s wrong

Gemini works as a general-purpose answer machine, pulling information and links from Google searches and connecting the dots with the knowledge it’s been trained on. In my experience, the strength of Gemini is the kind of questions you can ask it. You can make complex, multipart requests in natural, colloquial language that it basically always takes in stride. Unless what you’re asking for is one of a few subjects or types of requests Google doesn’t let the AI work with, it will always try to answer.

The problem is the quality of the answers can really vary. When Gemini provides links as citations, essentially backup for its answers, I generally feel more confident that it’s gotten an answer right. These links also make it a lot easier to check its work. Google has built in a button you can press that will automatically open a Google Search for your request to Gemini, but I’ve found that even that small amount of effort can be a turnoff. I’d much rather click a link to a direct source.

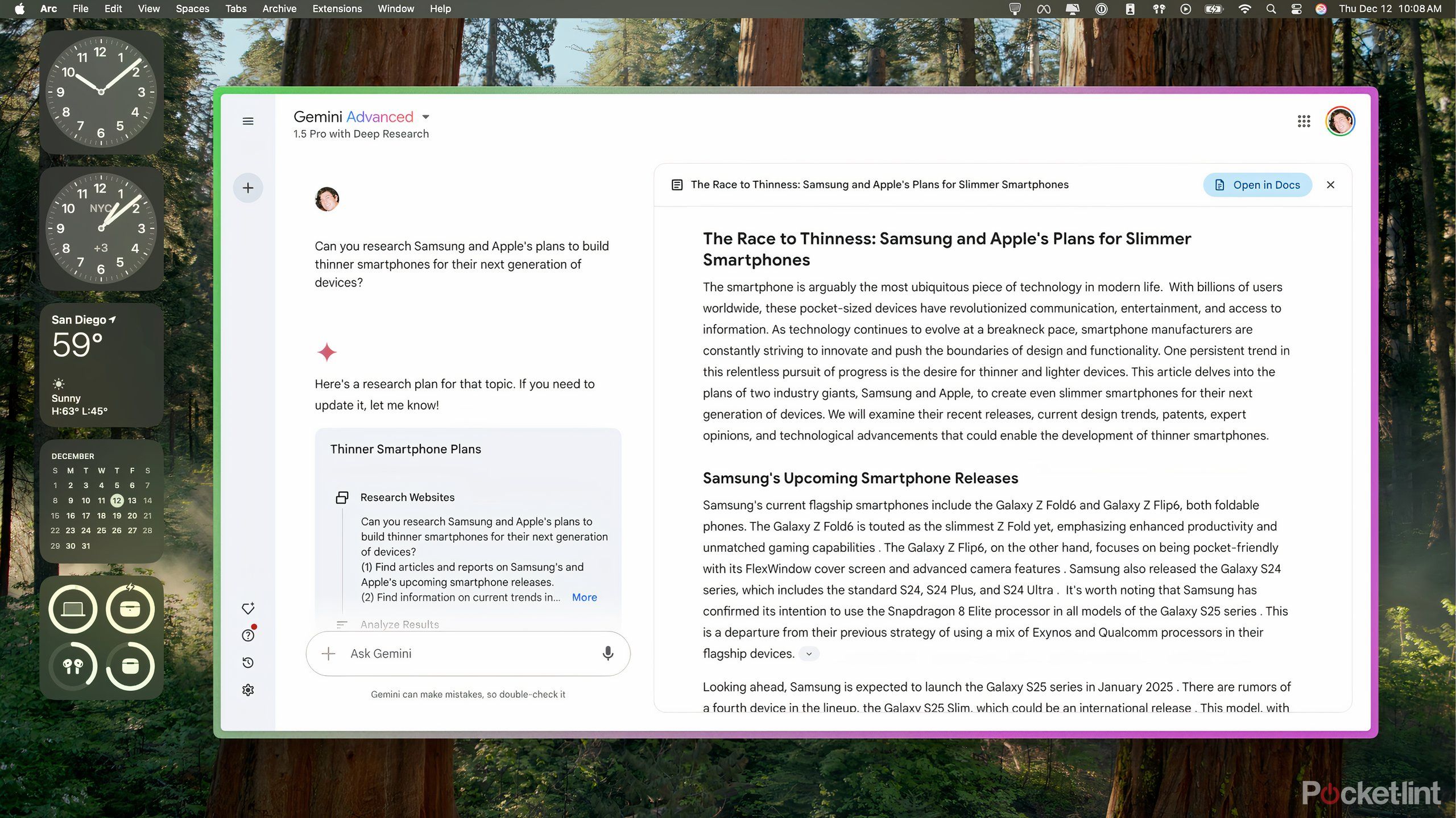

When Gemini answers directly, I’m a lot less inclined to use or rely on the answer. I’ve caught Gemini answering incorrectly or incompletely before, and I’ve likely missed it making mistakes entirely other times. Google’s recently added “Deep Research” tool can make catching these mistakes even more important. The Gemini feature lets the AI outline a research plan, read through webpages, and then produce a research paper of information and charts on a subject. Unless you’re willing to comb through multiple paragraphs of information, you really can’t trust it, though. I’ve already caught Deep Research sending me a chart with incorrect data, something I wouldn’t have noticed if I weren’t already familiar with the information I was having Gemini look at.

The bigger issue I had was that Gemini misunderstood my questions on more than one occasion, which required rephrasing and new questions to get the AI back on track answering at all. That’s a lot of wrangling for a tool that’s supposed to save you time.

Siri is more understanding, but not any smarter

For Apple Intelligence, Siri is the closest Apple comes to a chatbot that can answer questions across iOS, watchOS, iPadOS, and macOS. It’s historically not been that competent at doing much more than telling you what the weather is and setting timers, but Apple Intelligence is supposed to improve things. As part of the first wave of Apple Intelligence upgrades, Siri is more accepting of stumbles and multipart questions. If you can’t make your request out loud, you can also type to Apple’s assistant.

Over the last few years, Siri has slowly gotten better at pulling answers from the web to answer general questions, on top of its connection to Wikipedia, but it’s nowhere near as flexible as Gemini. Apple will still show you a bunch of web results if the question is too specific or complicated.

The recent release of iOS 18.2 (and corresponding updates on Apple’s other platforms) gives Siri a connection to ChatGPT for answering more complicated questions, which vastly expands the AI’s abilities. Unfortunately, that also introduces unreliability that wasn’t really present in Siri before. Eventually, Apple plans to give developers the ability to connect Siri to their apps and the functions and data inside them, but it’s not clear when that will be available. Overall, Gemini is better at answering questions than Siri, but you can probably trust Siri a bit more.

Generating professional and creative text

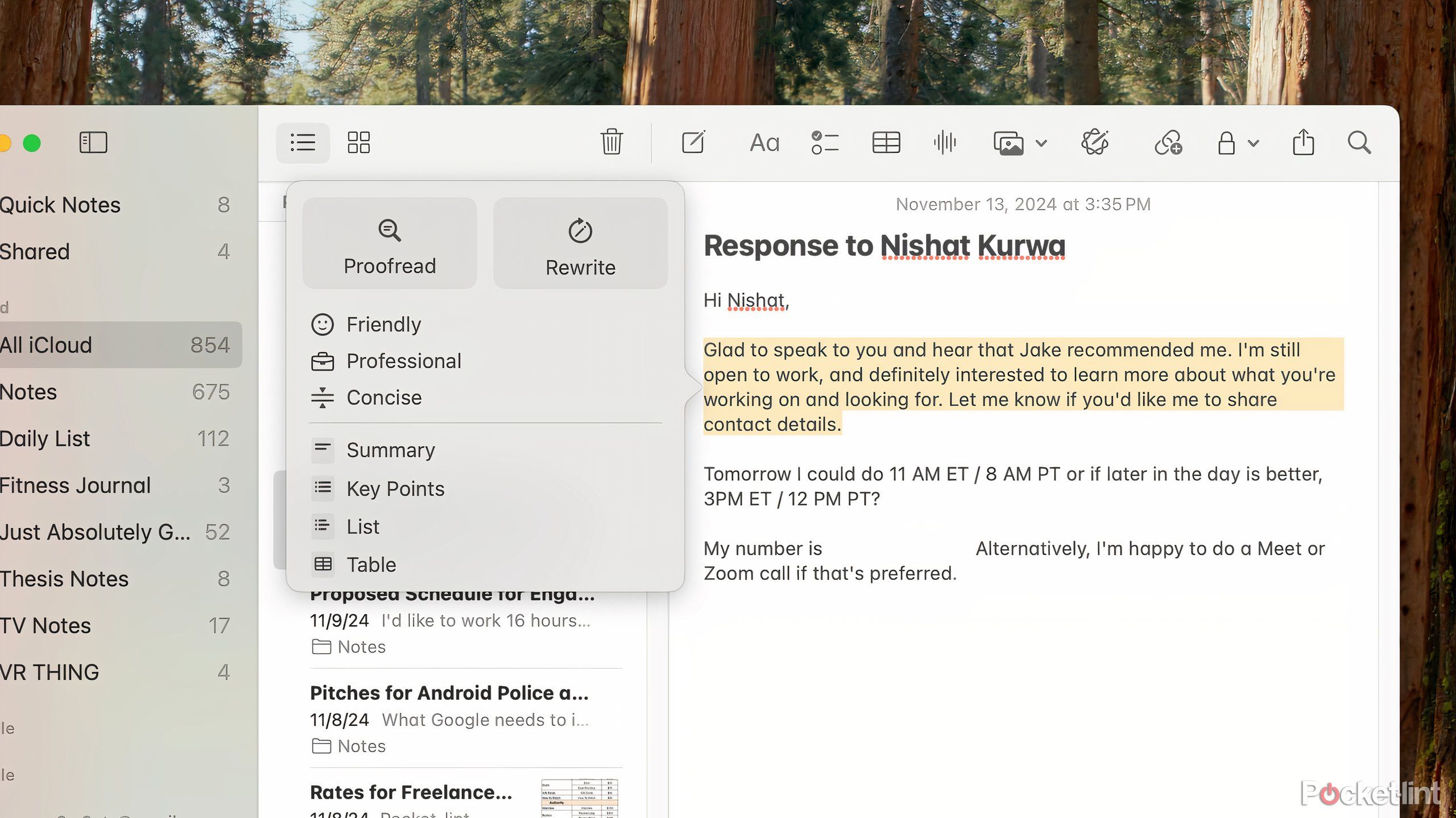

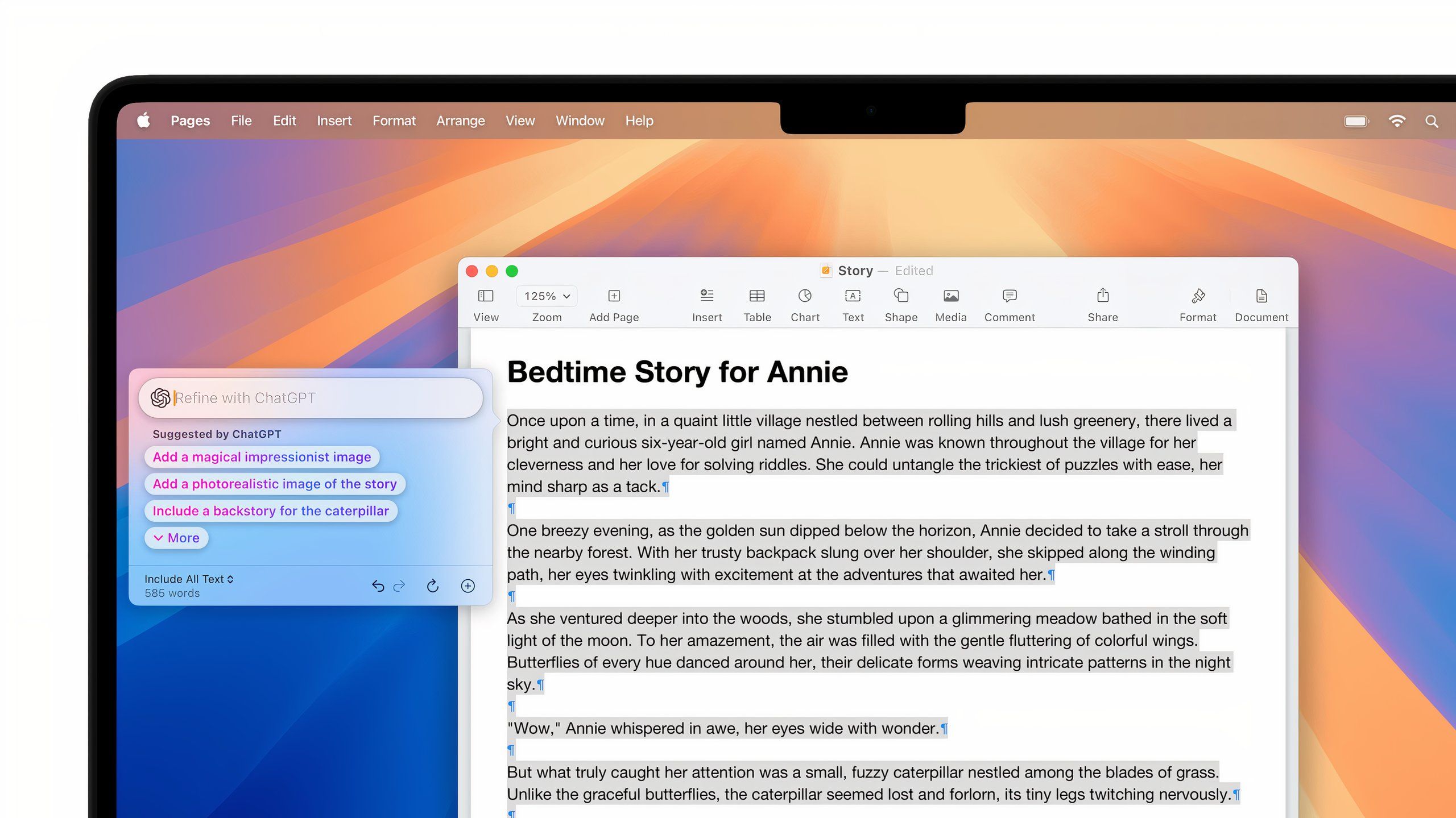

Apple Intelligence can act as a levelled-up spellcheck

Writing Tools is Apple Intelligence’s text generation and editing feature that’s accessible across iOS, iPadOS, and macOS. It’s focused on proofreading and rewriting existing text, and it only generates new material if it has something to start from. Writing Tools can also call on ChatGPT to generate entirely new text based on a prompt, which works just as well as using the ChatGPT app or website.

I’ve found Writing Tools can sometimes rewrite text to be “friendly” in a corny way or make a “professional” email overly formal, but its proofreading skills are helpful as a second pair of eyes on writing. It’s worth noting that some apps on macOS can obscure access to Writing Tools, which makes using the tool more complicated than it is on iOS or iPadOS where Apple exerts more control. On the whole, though, it’s a harmless, occasionally helpful feature.

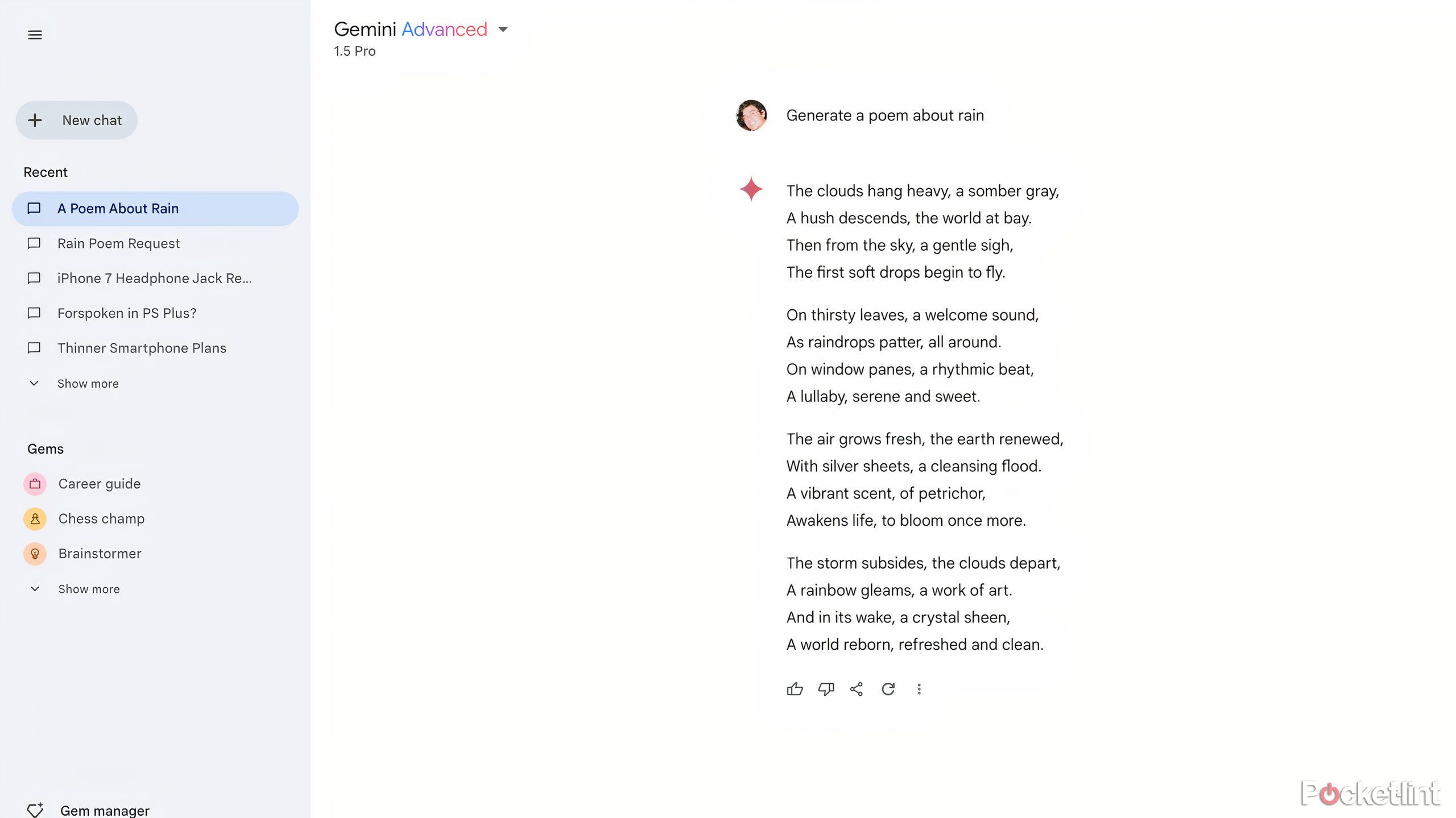

Gemini can create content anywhere there’s a text box

Gemini is, again, much more flexible than Apple’s tools and much more capable of creating nonsense. You can generate text across Google’s Workspace apps, Gmail, Google Messages, and basically any text box in Chrome. It’s able to do creative writing, like a poem, or formulaic text like a work memo, with ease. In my experience, its purely creative output is lacking, often feeling very cliché unless you’re willing to massage it to improve it. For a simple email response or filler text, it’s much more suitable.

I don’t think you should expect Gemini to write the first draft of your short story, but if there’s an email you can’t bring yourself to write for one reason or another — particularly anxious people might know the feeling — it’s a solid way to rip the band-aid off, provided you’re willing to double-check what the AI wrote and make sure it fits your voice.

The ultimate issue with AI-generated text is that when it’s terrible, people can usually tell, and at least for me, not even bothering to send someone a real human message is a particular kind of rude. If you’re purely interested in being able to create the widest variety of text possible, Gemini and Apple Intelligence (with ChatGPT integration) are much more evenly matched than they would be otherwise. I found Apple Intelligence’s ability to proofread my writing to be very helpful, but that’s mostly because it was easier to access: it’s integrated across Apple’s operating system and not just its apps.

Generating artificial photos and illustrations

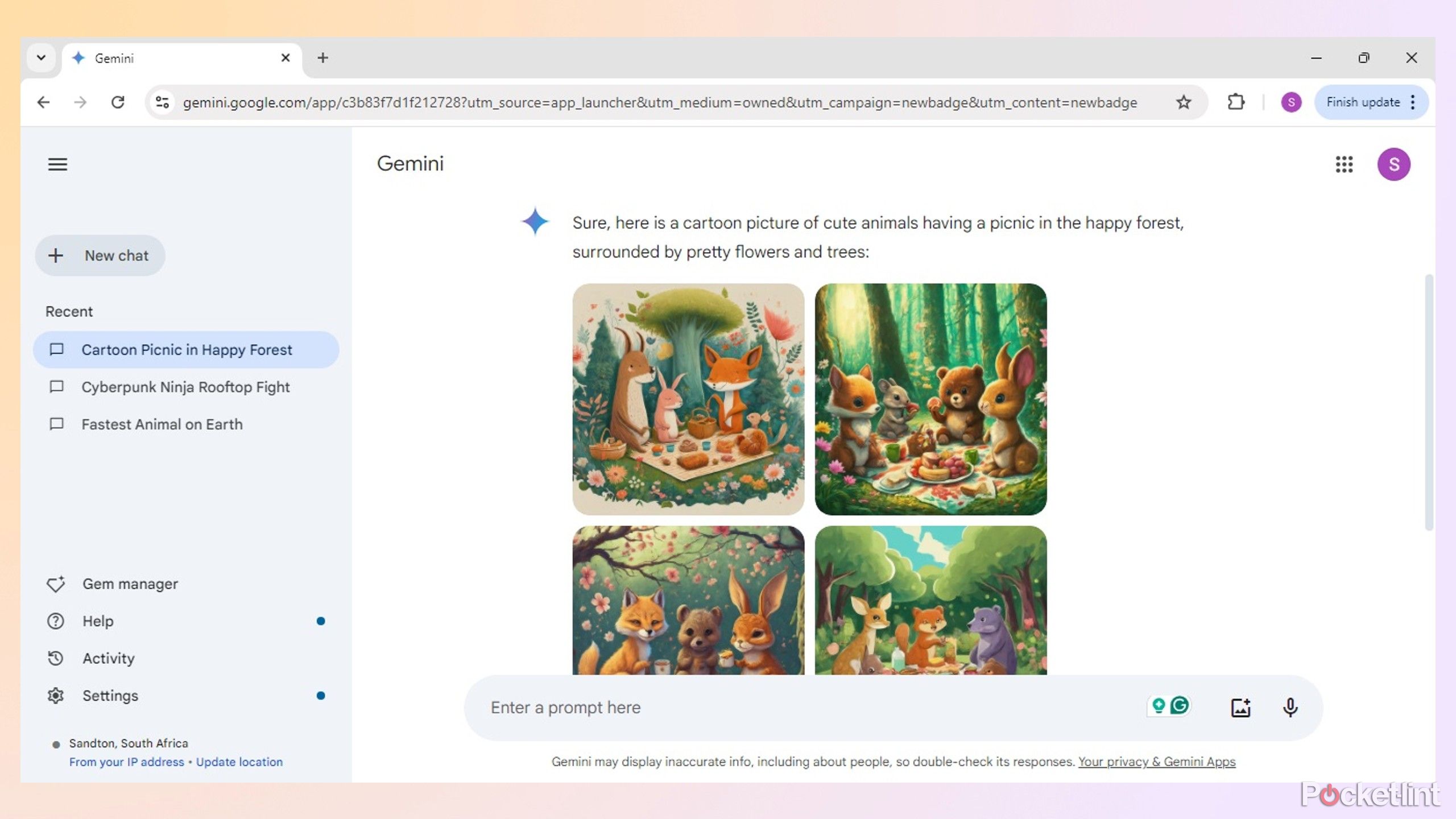

Gemini can produce photorealistic images and stylized illustrations

Gemini can produce images directly in a chat in its app or on the web using Google’s Imagen 3 model. Google designed the image generation model to understand prompts better and improve where plenty of other models struggle, like rendering legible text and producing more detailed images.

In my experience, that bears out in Gemini’s abilities. Google’s AI can create images in a wide variety of styles: hand-drawn sketches, DSLR-quality faux photos, and even quirkier things like claymation. Its photorealistic images are pretty impressive, especially if you’re interested in generating landscapes and nature shots. The more people or animals are involved, the more the quality of Gemini’s output can vary, but it’s a pretty solid starting place.

Gemini does have limitations as part of Google’s text and image generation policies, of course. The AI is not supposed to generate dangerous, violent, sexually explicit, or harmful material. It’ll also avoid generating factually inaccurate content that could be used as misinformation. It even completely refused to create images of real people for a time. How consistently Gemini follows these rules varies, but it’s reasonably competent at making aesthetically pleasing images.

Apple Intelligence has a smaller bag of image generation tricks

Apple Intelligence’s Image Playground is built into apps like Notes and Messages and also exists as a separate app on iOS and iPadOS 18.2. Image Playground can create images from scratch based on a prompt, suggested concepts, or a photo, and offer outputs in one of two different styles. “Animation” images seem inspired by Pixar’s 3D animation, and “Illustration” images look more like hand-drawn concept art.

Image Playground currently can’t generate images in the “Sketch” style that Apple demoed earlier in 2024.

On an iPad, the Image Wand tool in the Notes app can also be used to create an image from scratch based on a prompt or the contents of a note, or convert a sketch into something a little more finished and professional. Apple Intelligence’s images are far less detailed than Gemini’s, and its tools are not nearly as flexible. Image Wand is a unique way of using image generation that speaks to Apple’s integration of Apple Intelligence into its various platforms, but you’re likely to get better results with Gemini. One plus of Apple Intelligence is that you can generate images without an internet connection, something you can’t say for Gemini.

Working with and analyzing existing files

Unless you’re using ChatGPT, Apple Intelligence’s hands are tied

Without relying on OpenAI’s model, Apple Intelligence has little ability to do anything with material outside of Apple’s own apps. In iOS 18.2, the Mail app can automatically sort emails into categories like Updates or Promotions, not unlike Gmail. There’s also the ability to summarize emails, messages, notifications, and audio recordings that now exists across macOS, iOS, and iPadOS. That’s really as far as Apple Intelligence goes.

By using ChatGPT integration, you can do things like analyze a document you’ve uploaded or work with specific pieces of a text. It’s the kind of thing ChatGPT is already good at, but delivered through Apple’s interface. Unlike Apple’s existing tools, it’ll require an internet connection, too.

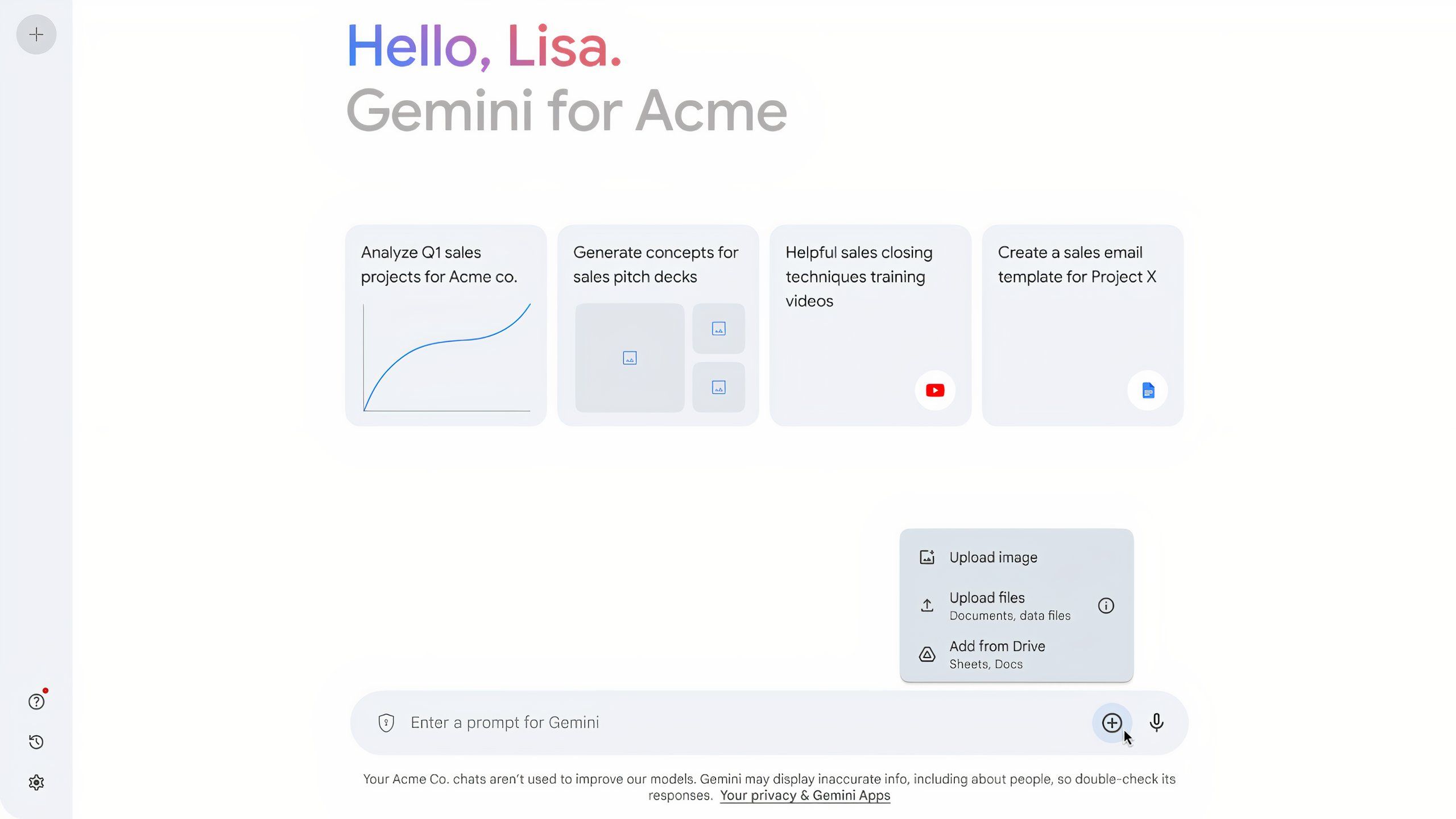

Gemini wants to gobble as many files as you’ll give it

In contrast, Gemini is much more capable and willing to work with whatever files you have handy. You can upload just about any file to Gemini and have the chatbot work with it, though PDFs seem to be what Google’s model is most conversant in. You could summarize a PDF, pull data out of multiple documents and put it in a table, or even search the text for a specific mention of a topic or a quote.

You’d have to read the documents to have absolute certainty that Gemini isn’t just making things up, which eliminates some of the advantages of having an AI do this for you in the first place, but that’s par for the course for anything ChatGPT does in Apple Intelligence as well. On the whole, Apple’s on-device summarization features are far less likely to lie, though they could misrepresent things, just because they work with a lot less. Gemini can technically do more and it more closely fits with what I think people expect AI to be able to do, though.

Mobile-exclusive features for Pixel and iPhone

Gemini has replaced Google Assistant at the heart of Android

Gemini is integrated across Google’s applications and increasingly capable of acting inside of them on your phone. As of December 2024, the chatbot has also taken on many of the capabilities of Google Assistant, such as setting timers, playing music, and controlling settings on your smartphone. That’s on top of its more demanding ability to pull information from images or emails and turn it into calendar events, and to provide information about the things on your screen.

The flashiest of Gemini’s mobile features has to be Gemini Live, though. It’s essentially Google’s voice mode for Gemini, letting you have live conversations with the AI in the Gemini app if you need a hands-free way to get your questions answered. It’s on some level a party trick, but it feels impressive.

Apple Intelligence is waiting on a smarter Siri

When the updated version of Siri ships at some point in 2025, Apple Intelligence could have a revolutionary way to get things done on your phone. It’s hugely dependent on Apple getting Siri to understand what’s on your screen and developers being able to create ways for the voice assistant to be able to do things in apps. We still don’t know if it’ll work as well as Apple has described.

Visual Intelligence, a new feature of the iPhone 16 and presumably future phones with Camera Control, is a mobile-first application of Apple Intelligence’s ability to understand images instead of just text and audio. It’s capable of identifying and answering questions about plants, animals, and businesses, pulling phone numbers and emails, and translating text from the world around you. It also relies in part on Google’s and OpenAI’s models to answer more complex questions. It’s not all that different from Google Lens, but it could prove to be helpful in a pinch (it’s currently available in beta).

Which AI should you use in your life?

Gemini feels like the future, but Apple Intelligence is practical

Gemini is technically more capable than Apple Intelligence, but it’s also more obscure and harder to trust when it doesn’t show its work. Apple Intelligence really doesn’t do all that much, but its promised features and deep integration with Apple devices could make it a real winner in the long run.

I think most people don’t realistically need to rely on generative AI in their day-to-day life just yet, but if, for some reason, you do (or you’ve convinced yourself you do), Gemini gets much closer to the cutting edge of this tech. You have to be comfortable with its many drawbacks, but it really can be useful. For everyone else, Apple Intelligence is occasionally worth a try, and easy enough to ignore until an upgraded Siri starts wowing people in 2025.
